The arrest of Telegram CEO Pavel Durov in France has brought the responsibilities of digital platforms for content moderation into sharp focus. The high-profile case has thrust Telegram’s controversial approach to child safety into the spotlight, raising critical questions about what tech companies must do to combat child sexual abuse material (CSAM). IT professionals and decision-makers in the Asia Pacific region must now confront the consequences of inadequate content regulation. This article examines Telegram’s child safety crisis: the ignored warnings, the regulatory fallout, and the urgent need for proactive measures to keep users safe.

Telegram’s Controversial Stance on Content Moderation

Minimal Intervention Policy

- Telegram has long maintained a controversial approach to content moderation, emphasizing user privacy and minimal intervention. This stance has drawn sharp criticism from child safety advocates and law enforcement agencies worldwide. The platform’s optional end-to-end encrypted Secret Chats and self-destructing messages, while praised for protecting user privacy, have become a double-edged sword in the fight against child sexual abuse material (CSAM).

Telegram’s Lack of Cooperation with Authorities

- One of the most contentious aspects of Telegram’s policy is its reluctance to collaborate with law enforcement agencies. Unlike many other social media platforms, Telegram has been accused of failing to respond adequately to requests for information related to CSAM investigations. This lack of cooperation has frustrated efforts to track down and prosecute offenders, potentially leaving vulnerable children at risk.

Inadequate Reporting Mechanisms

- Critics argue that Telegram’s reporting mechanisms for CSAM and other illegal content are insufficient. The platform’s infrastructure, spread across multiple jurisdictions, and its emphasis on user anonymity make it challenging to implement robust content moderation practices. This has led to concerns that Telegram may be inadvertently providing a haven for individuals seeking to distribute or access CSAM, undermining global efforts to combat this serious issue.

Advocacy Groups Sound Alarm on Child Safety Issues

Repeated Warnings from Child Protection Organizations

- Child safety advocacy groups have long been raising red flags about Telegram’s inadequate measures to combat child sexual abuse material (CSAM). Organizations like the National Center for Missing and Exploited Children (NCMEC) have repeatedly voiced concerns over the platform’s lax approach to content moderation and its reluctance to cooperate with law enforcement agencies.

- These groups argue that Telegram’s emphasis on user privacy and encryption, while important, has inadvertently created a haven for predators and distributors of CSAM. The platform’s refusal to implement robust content filtering mechanisms or to actively participate in industry-wide efforts to detect and report CSAM has drawn sharp criticism from child protection experts.

Calls for Increased Accountability and Transparency

Advocacy organizations are demanding greater accountability from Telegram, urging the company to:

- Implement more stringent content moderation policies
- Collaborate more closely with law enforcement agencies
- Increase transparency regarding its efforts to combat CSAM

These groups stress that Telegram’s current stance not only puts children at risk but also undermines global efforts to eradicate online child exploitation. They argue that the platform’s massive user base and global reach amplify the potential harm, making it crucial for Telegram to take immediate and decisive action to address these serious child safety concerns.

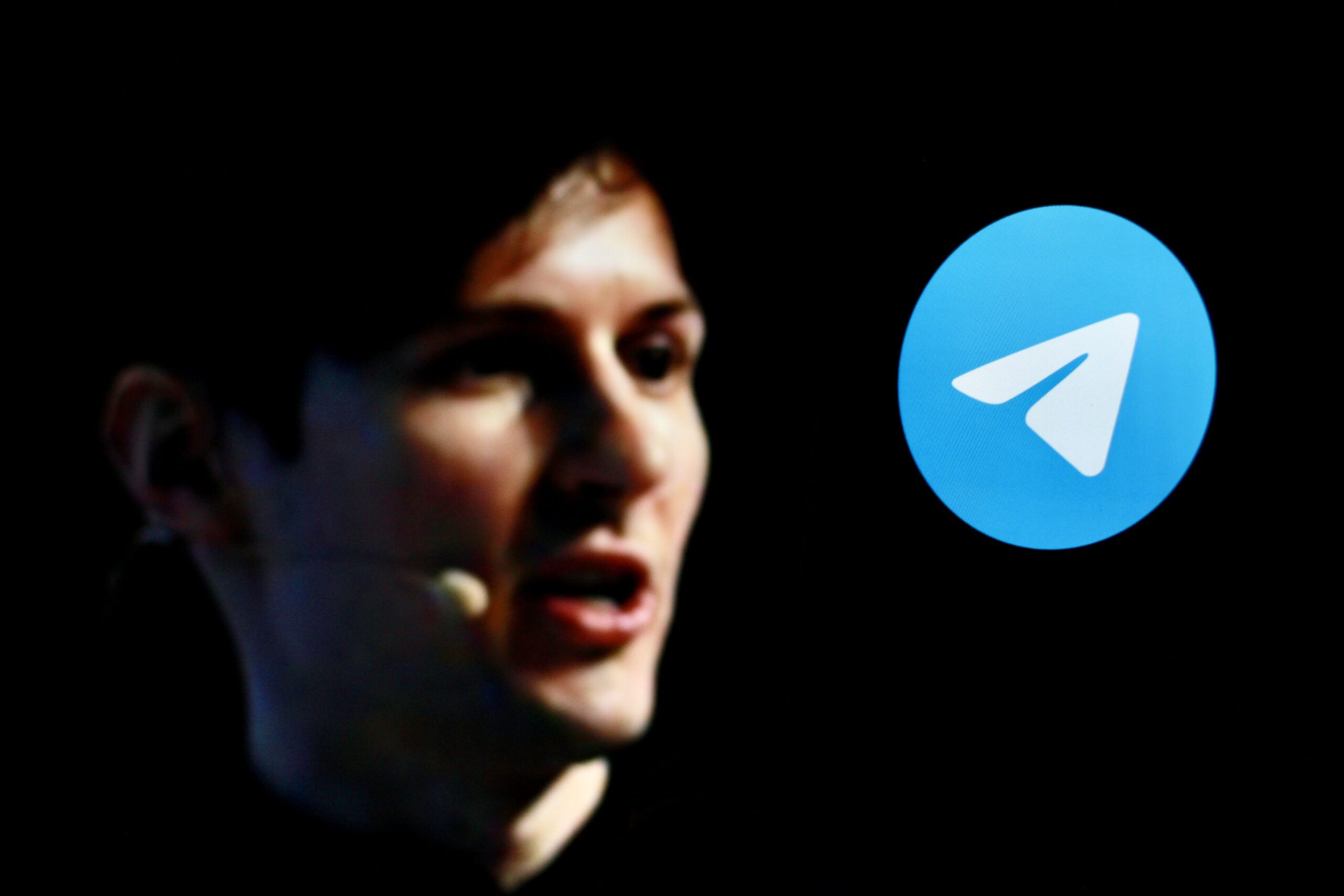

Arrest of Telegram’s CEO Highlights Platform’s Noncompliance

Telegram’s Turning Point: Durov’s Arrest

- The recent arrest of Pavel Durov, Telegram’s CEO, in France marks a critical juncture in the ongoing debate surrounding digital platforms’ responsibility in moderating content. This high-profile incident has thrust Telegram’s controversial stance on content moderation into the spotlight, particularly concerning its handling of child sexual abuse material (CSAM).

A History of Warnings Ignored

For years, child safety advocacy groups, including the National Center for Missing and Exploited Children (NCMEC), have raised alarms about Telegram’s lax approach to combating CSAM. Despite these repeated warnings, the platform has consistently refused to:

- Collaborate effectively with law enforcement agencies
- Implement robust content moderation practices
- Engage in proactive measures to identify and remove CSAM

Implications for the Tech Industry

Durov’s arrest serves as a wake-up call for IT professionals and decision-makers, especially in the Asia Pacific region. It underscores the critical importance of:

- Implementing strict content regulation policies
- Adopting proactive measures to ensure user safety
- Fostering a culture of responsibility and accountability in digital platforms

As regulatory scrutiny intensifies, companies must prioritize user protection or risk facing severe consequences, both legal and reputational.

The Need for Strict Content Regulation on Digital Platforms

Protecting Vulnerable Users

- In the wake of Telegram’s child safety crisis, the imperative for stringent content regulation on digital platforms has never been clearer. As IT professionals and decision-makers in the Asia Pacific region, you must recognize that robust moderation practices are not just ethical obligations but crucial safeguards against legal and reputational risks. The Telegram case serves as a stark reminder that platforms prioritizing user privacy must balance this with equally rigorous child protection measures.

Implementing Proactive Measures

- To ensure user safety, you should advocate for and implement proactive content monitoring systems. This includes leveraging advanced AI and machine learning algorithms to detect and flag potentially harmful content before it reaches users. Additionally, establishing clear reporting mechanisms and rapid response protocols for addressing user concerns is essential. By taking these steps, you can create a safer digital environment while maintaining user trust.

Collaboration with Law Enforcement

- The Telegram incident underscores the importance of cooperation between digital platforms and law enforcement agencies. As industry leaders, you must foster open lines of communication with relevant authorities, facilitating swift action against illegal content and its perpetrators. This collaboration not only aids in combating CSAM but also demonstrates your commitment to corporate social responsibility, potentially mitigating regulatory scrutiny and enhancing your platform’s reputation in the long run.

Telegram’s Controversy: Implementing Robust Safeguards for User Protection

In light of Telegram’s recent controversies, IT professionals and decision-makers must prioritize user safety on digital platforms. Implementing robust safeguards is no longer optional—it’s a necessity in today’s digital landscape.

Proactive Content Moderation

- To combat the spread of harmful content, including CSAM, platforms must adopt proactive content moderation strategies. This involves leveraging advanced AI and machine learning algorithms to detect and flag potentially abusive material before it reaches users. Additionally, human moderators should be employed to review flagged content, ensuring a balance between automated systems and human judgment.
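As a rough illustration of how automated flagging and human review can be combined, the following minimal Python sketch routes each upload to one of three outcomes. Everything in it is an assumption made for illustration: the `ModerationPipeline` class, the `risk_score` callable (a stand-in for a trained classifier), the threshold value, and the use of exact SHA-256 matching against a list of known files. Exact hashes miss re-encoded copies, so a real deployment would likely rely on perceptual hashing and on reporting channels required by local law.

```python
import hashlib
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class Decision(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    BLOCK_AND_REPORT = "block_and_report"


@dataclass
class ModerationPipeline:
    # Hypothetical inputs: hex digests of files already confirmed as illegal,
    # and a scoring function returning a risk estimate between 0.0 and 1.0.
    known_hashes: set[str]
    risk_score: Callable[[bytes], float]
    review_threshold: float = 0.5  # assumed value, to be tuned in practice
    review_queue: list[str] = field(default_factory=list)

    def triage(self, upload_id: str, payload: bytes) -> Decision:
        # Step 1: an exact match against known material is blocked outright.
        digest = hashlib.sha256(payload).hexdigest()
        if digest in self.known_hashes:
            return Decision.BLOCK_AND_REPORT
        # Step 2: uncertain content is queued for a human moderator.
        if self.risk_score(payload) >= self.review_threshold:
            self.review_queue.append(upload_id)
            return Decision.HUMAN_REVIEW
        # Step 3: everything else is delivered normally.
        return Decision.ALLOW
```

The design choice worth noting is that the automated score never removes content on its own: only a match against already confirmed material is blocked immediately, while borderline cases wait in `review_queue` for human judgment.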

Collaboration with Law Enforcement

- Establishing strong partnerships with law enforcement agencies is paramount. Platforms should develop clear protocols for responding to legal requests and reporting suspicious activities. This cooperation not only aids in investigations but also serves as a deterrent to potential offenders.

User Empowerment and Education

- Empowering users through robust reporting mechanisms and educational initiatives is essential. Platforms should provide easily accessible tools for users to report concerning content or behavior. Furthermore, implementing comprehensive safety guides and in-app notifications can help educate users about potential risks and best practices for safe online interactions.

Regular Security Audits

- Conducting frequent security audits and vulnerability assessments is crucial to identify and address potential weaknesses in the platform’s safety measures. These audits should encompass both technical infrastructure and policy effectiveness, ensuring a holistic approach to user protection.

Summing It Up

As you navigate the complex landscape of digital communication platforms, it’s crucial to remain vigilant about the safety measures and regulatory compliance of the tools you choose. Telegram’s ongoing child safety crisis serves as a stark reminder of the potential consequences of inadequate content moderation and resistance to law enforcement cooperation. When selecting messaging platforms for your organization, prioritize those with robust safety protocols and a demonstrated commitment to user protection. By doing so, you not only safeguard your users but also mitigate potential legal and reputational risks. In an era of increasing scrutiny on digital platforms, proactive measures to ensure user safety are no longer optional—they’re essential.
